AI today is helping us re-imagine our future in this world. It is helping us unlock our true potential and imagine the unimagined. In this interesting read, Kiran will take you through a technical rundown of how AI tech has evolved and how it can eventually help us lead a sustainable life.

On a busy Monday morning at the peak of the second Covid wave, I had just dialed into a client meeting from home when a drilling noise began. Possibly, it was a neighbour carrying out urgent repairs. The sound, thankfully, faded into the background after a few minutes, but from then on, a part of me was always on alert. In case that drilling sound returned, I was ready to mute my presentation and signal a colleague who was also in the meeting so that she could take over. This incident triggered a faint memory of rainforests and the efforts of a man named Topher White, who set out to invent a low-cost monitoring system to protect them!

Back in 2011, when White was volunteering at a gibbon reserve, illegal logging was a major issue. The reserve had a few guards, but it was hard to monitor the vast forests using traditional methods, which involved patrolling the jungle continuously while listening attentively for one particular sound: the noise made by the chainsaws used to fell trees. White resolved to do something about it.

Sound AI is an insanely exciting subject. Say we pluck the string of an acoustic guitar. The string’s vibration, amplified by the wooden top plate, causes the air molecules around it to vibrate. These knock into neighbouring molecules, which in turn start shaking. The result is a travelling variation in air pressure, which we call a sound wave. When these waves strike our delicate eardrum, they cause it to vibrate. This, in turn, causes ripples in the fluid inside our ears. Hair-like cells there convert the ripples into nerve impulses, which are then processed by our brain, and we “hear” sounds.

So, the sound that humans perceive is not the “real” sound in that sense. It is merely our interpretation of the actual sound wave. If an ear and brain were not around when a cracker explodes, there would be no “sound”! Now consider the opposite situation.

The brain seems to have evolved the ability to capture changes in vibrations across a range of frequencies over a span of time, archive them, and replay them when necessary. While it would be interesting to speculate on how the brain achieves this miracle, let us analyze how music is traditionally stored.

Sound waves are no different from the waves on the beach or the rope waves generated in the gym. They have an amplitude and a frequency. The amplitude is simply the maximum displacement from the centre, and the frequency is the number of waves that pass a fixed point per unit of time. In the golden era of cassettes, microphones typically had tiny magnets that vibrated with the movements of air, producing an electrical current. This current caused (other) magnetic particles on the tape to align in proportion to the strength of the signal, thereby recording sound in an analogue fashion. But nowadays, we digitize sound by ‘sampling’ the amplitude of the wave at short, regular intervals of time. CDs store music that is sampled 44,100 times each second. A 1-second clip of such sound can therefore be stored in a NumPy array of 44,100 values.
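To make the sampling idea concrete, here is a minimal sketch that builds one second of a pure tone at the CD rate. The helper name `sample_sine` and the 440 Hz test tone are illustrative assumptions, not something taken from a real recording pipeline.

```python
import numpy as np

SAMPLE_RATE = 44_100  # samples per second, the CD rate mentioned above


def sample_sine(freq_hz, duration_s=1.0, amplitude=1.0, sample_rate=SAMPLE_RATE):
    """Return a sine wave measured at evenly spaced instants (hypothetical helper)."""
    n_samples = int(round(duration_s * sample_rate))
    t = np.arange(n_samples) / sample_rate  # time of each sample, in seconds
    return amplitude * np.sin(2 * np.pi * freq_hz * t)


# One second of a 440 Hz tone (an arbitrary choice for illustration).
clip = sample_sine(440.0)
print(clip.shape, clip.dtype)
```

The array holds one amplitude value per sampling instant, so a one-second clip has exactly 44,100 entries.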

Every sound in this universe is either an individual sound wave or a combination of sound waves. E.g., the sound of music in an orchestra is just the sum of the sound waves of all the instruments being played. It would be much simpler to manipulate sound if we could find a way to “unblend” it into respective constituent frequencies—like how a prism unblends light.

The Fourier Transform (FT) does exactly this. It can be pictured as a bank of filters, where each filter corresponds to a different frequency. Imagine multiplying the original sound with a wave of a specific frequency and summing the result. The closer the match, the higher the resulting summed product value. By measuring how strong the output of each filter is, we get an idea of the sound’s key frequencies.
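The multiply-and-sum idea can be sketched in a few lines. This is an illustration under stated assumptions: the function name `probe_strength` is made up, the test signal is a synthetic mix of two tones, and the probe is a complex exponential (a cosine and a sine together) so that the result does not depend on the phase of the incoming sound.

```python
import numpy as np

SAMPLE_RATE = 44_100


def probe_strength(signal, freq_hz, sample_rate=SAMPLE_RATE):
    """Multiply the signal by a probe wave of one frequency and sum the product.

    Hypothetical helper. Returns a normalised magnitude: large when the
    signal contains freq_hz, close to zero when it does not.
    """
    t = np.arange(len(signal)) / sample_rate
    probe = np.exp(-2j * np.pi * freq_hz * t)  # cosine and sine probe combined
    return np.abs(np.sum(signal * probe)) / len(signal)


# Synthetic one-second "sound": a 440 Hz tone plus a quieter 1000 Hz tone.
t = np.arange(SAMPLE_RATE) / SAMPLE_RATE
mix = np.sin(2 * np.pi * 440 * t) + 0.5 * np.sin(2 * np.pi * 1000 * t)

strengths = {f: probe_strength(mix, f) for f in (220, 440, 1000, 2000)}
for freq, strength in strengths.items():
    print(f"{freq:>5} Hz -> {strength:.3f}")
```

The probes at 440 Hz and 1000 Hz respond strongly, in proportion to each tone's amplitude, while the probes at 220 Hz and 2000 Hz stay near zero.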

Our ears have a natural FT machine built in. When doctors listen to heartbeats via a stethoscope, at least two different sounds can be heard. Some heart conditions can be detected by simply listening to the heart sound (and internally breaking it up into its individual frequency constituents). While our ears do this in real time, computing an FT was time-consuming and impractical for a long time. Things began to change rapidly in 1965, when James Cooley and John Tukey (the latter at Princeton) published a high-speed algorithm for the FT that significantly reduced computation time. It came to be known by the simple name of Fast Fourier Transform (FFT). Now one could generate FTs in real time!
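In practice nobody writes the FFT by hand. As a rough sketch, NumPy's `np.fft.rfft` and `np.fft.rfftfreq` can pull the strongest frequencies out of a signal. The helper `dominant_frequencies` and the two-tone test signal are assumptions made for illustration only.

```python
import numpy as np

SAMPLE_RATE = 44_100


def dominant_frequencies(signal, sample_rate=SAMPLE_RATE, top_k=2):
    """Return the top_k strongest frequencies (in Hz), strongest first."""
    spectrum = np.abs(np.fft.rfft(signal))  # magnitude of each frequency bin
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / sample_rate)  # bin centres in Hz
    strongest = np.argsort(spectrum)[-top_k:][::-1]
    return [float(freqs[i]) for i in strongest]


# Synthetic one-second signal: a 440 Hz tone plus a quieter 1000 Hz tone.
t = np.arange(SAMPLE_RATE) / SAMPLE_RATE
mix = np.sin(2 * np.pi * 440 * t) + 0.5 * np.sin(2 * np.pi * 1000 * t)

peaks = dominant_frequencies(mix)
print(peaks)  # the two tones, up to floating-point rounding
```

A real recording would not land so cleanly on single bins, so the peaks would be broader and a tolerance or peak-picking step would be needed.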

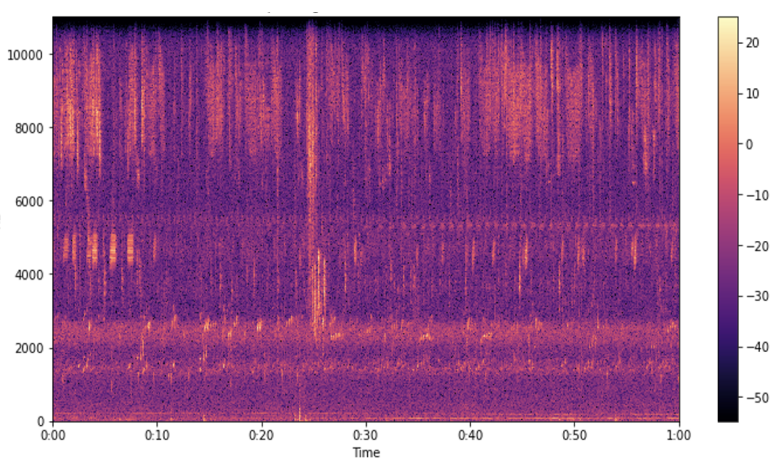

FTs are like a snapshot in time. We get a glimpse of a sound and its constituents at a particular moment. It would be nicer to see how these constituents change over time. It is possible to do this using the STFT (Short-Time Fourier Transform), a sliding-frame FFT. We first take the signal and split it into overlapping slices (the overlap preserves continuity between slices). We then apply the FFT to each slice. We can now plot the (logarithm of the) result, with time along the horizontal axis, frequency along the vertical axis, and pixel colour showing the intensity of each frequency. The resulting heat map is an image called a spectrogram.
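The slice, transform, and take-the-logarithm recipe above can be sketched with plain NumPy. The frame length of 1024 samples, the hop of 256 samples, the Hann window, and the function names are all illustrative choices rather than fixed parts of the method. Libraries such as SciPy and librosa offer ready-made versions.

```python
import numpy as np

SAMPLE_RATE = 44_100


def stft_magnitude(signal, frame_len=1024, hop=256):
    """Sliding-frame FFT: returns an array of shape (freq_bins, n_frames)."""
    window = np.hanning(frame_len)  # tapers each slice to reduce edge effects
    n_frames = 1 + (len(signal) - frame_len) // hop
    frames = np.stack(
        [signal[i * hop : i * hop + frame_len] * window for i in range(n_frames)]
    )
    return np.abs(np.fft.rfft(frames, axis=1)).T


def log_spectrogram(signal, frame_len=1024, hop=256, eps=1e-10):
    """Magnitudes in decibels; eps avoids taking the logarithm of zero."""
    return 20 * np.log10(stft_magnitude(signal, frame_len, hop) + eps)


# Synthetic test: 440 Hz for the first half second, then 2000 Hz.
t = np.arange(SAMPLE_RATE) / SAMPLE_RATE
signal = np.where(t < 0.5, np.sin(2 * np.pi * 440 * t), np.sin(2 * np.pi * 2000 * t))

spec = log_spectrogram(signal)
bin_hz = SAMPLE_RATE / 1024  # width of one frequency bin in Hz
first_peak_hz = float(np.argmax(spec[:, 0]) * bin_hz)
last_peak_hz = float(np.argmax(spec[:, -1]) * bin_hz)
print(spec.shape, round(first_peak_hz), round(last_peak_hz))
```

Each column of `spec` is one moment in time and each row is one frequency band, so passing the array to an image plotting routine (for example `matplotlib.pyplot.imshow` with `origin="lower"`) would draw the heat map described above.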

The time-series data composed of individual FTs can also be analyzed directly by an AI program. E.g., we could detect the rhythmic frequency of a chainsaw, if present, directly from the discrete time-series data instead of stitching together FTs and converting them into a spectrogram image. But this is done only occasionally. It is far more convenient for the AI to analyze images rather than raw data. The reason for that lies in 2012, when a student named Alex Krizhevsky and a colleague, under the guidance of Geoff Hinton, competed in the ImageNet competition using an unconventional architecture that beat every other competitor by a considerable margin. The architecture, (later) named AlexNet, harnessed CNNs (Convolutional Neural Nets) effectively and advanced the field of computer vision by leaps and bounds. It, therefore, made sense to use these ready-made SOTA architectures to analyze spectrogram images. This arrangement worked surprisingly well, considering that CNNs are, by nature, translation equivariant. For a CNN, a ‘face’ is a ‘face’ irrespective of where it appears in the image. Spectrograms do not share that property. The y-axis is the frequency, so a pattern at the bottom of the spectrogram is not equivalent to the same pattern at the top. Yet CNNs applied to spectrograms work far better than time-series techniques. Perhaps the sheer amount of innovation that has gone into developing CNN architectures, along with the scope for transfer learning, has helped.

The spotlight shifted away from image processing towards Natural Language Processing (NLP) with the publication of a 2017 paper by Vaswani et al., which introduced the transformer architecture. The following years saw tremendous interest in NLP, and starting in 2020, Vision Transformers brought the same architecture to images. We can now use the robust transformer architecture in computer vision instead of CNNs, often with better results. The SOTA in vision as of 2021 was the Swin Transformer by Microsoft. Exciting times are back in vision, and like before, the field of Sound AI can piggyback on this progress. Transformers in vision are more apt for Sound AI than CNNs because they are generic and carry significantly weaker inductive biases (such as translation equivariance). This should make spectrogram analysis more effective.

We can also use Fourier’s equations to “re-blend”, i.e., recreate the original sound from its constituent frequencies. We could choose to omit specific frequencies when re-blending. Had I captured the audio of my client meeting that day, I could have used FTs to split the sound into its constituent frequencies, isolate the frequencies produced by my friendly neighbour’s drill, and re-blend all frequencies barring those. I would end up re-creating the original sounds of the meeting without the drilling sound. Doing that in real time would mean we could take client calls with a drill at full blast behind us without the client becoming aware of it.
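A toy version of this "unblend, drop, re-blend" idea is sketched below. It assumes the unwanted noise sits in a narrow band that does not overlap with the wanted sound, which is rarely true of a real drill. The tones standing in for the voice and the drill, the band edges, and the helper name `remove_band` are all hypothetical.

```python
import numpy as np

SAMPLE_RATE = 44_100


def remove_band(signal, low_hz, high_hz, sample_rate=SAMPLE_RATE):
    """Zero out every frequency between low_hz and high_hz, then re-blend."""
    spectrum = np.fft.rfft(signal)  # unblend into frequencies
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / sample_rate)
    spectrum[(freqs >= low_hz) & (freqs <= high_hz)] = 0  # omit the unwanted band
    return np.fft.irfft(spectrum, n=len(signal))  # re-blend into a waveform


# Stand-ins for illustration: a 300 Hz "voice" tone and a 3000 Hz "drill" tone.
t = np.arange(SAMPLE_RATE) / SAMPLE_RATE
voice_like = np.sin(2 * np.pi * 300 * t)
drill_like = 0.8 * np.sin(2 * np.pi * 3000 * t)

cleaned = remove_band(voice_like + drill_like, low_hz=2900, high_hz=3100)
print(float(np.max(np.abs(cleaned - voice_like))))  # residual error, close to zero
```

Because real drilling noise is broadband and changes over time, a practical system would work frame by frame on the STFT and would likely need a learned model to decide which parts of the spectrum to suppress.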

The sights and sounds of a rainforest are like a pleasant assault on our senses. If listening to it gives you an instant high, then hearing the faint, monotonous sound of a chainsaw intermingled with it might bring a look of consternation to your face. Illegal logging strips this planet of millions of acres of natural forest annually. Thanks to the development of technology and the will of people like Topher, we can now develop automated solutions to detect and flag unusual sounds when listening to the beautiful symphony sung by our rainforests.

Disclaimer: Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the respective institutions or funding agencies.