Lawmakers across the globe aim to protect against discrimination in lending decisions through a number of local and federal regulations. In the US, for instance, the Equal Credit Opportunity Act (ECOA) makes it unlawful to discriminate against credit applicants based on factors such as race, color, religion, national origin, sex, marital status, age, or assistance from any public programs. Even so, such factors can still influence the traditional practices and standard operating procedures prevalent today.

There is no working definition of bias under the act, only an explanation of its provisions, which can often fall short of ensuring fairness. Lately, however, the evolution of technology has offered hopes of change. AI, specifically, with its ability to learn, evolve, and compute rapidly, is capable of eliminating bias that may be attributed to subjectivity in the (manual) decision-making process. It turns out, however, that AI can also become biased. This calls for thorough due diligence and comprehensive checks and balances to make AI systems fair.

What Causes Bias?

AI is not born with biases. We teach it how to discriminate. AI is based on mathematical models that try to mimic the behavior learned from a dump of historical data to make predictions about unseen data. Humans learn by observing what’s happening around them. AI algorithms learn from the patterns in the data. At the risk of oversimplifying things, there is a tempting analogy to describe the two processes:

A human child exposed continuously to discriminatory behavior may not know any better. Likewise, an AI algorithm will learn the biases that exist in its training data. The algorithms themselves are fundamentally free from any prejudices, contrary to what is widely feared and believed. The point I am making here is that the problem lies in the dataset we provide to the AI algorithm and, hence, the solution lies in fixing the bias in the dataset rather than fixing the algorithm.

I believe it’s safe to say that all modern banks and lenders are fully aware of the risks involved and are taking measures to curb bias at different levels. The first step in solving for bias is the recognition that it’s not a trivial problem to solve. If it were, we wouldn’t be talking about it. It requires a systematic framework that detects and eliminates bias while providing incremental improvements. It is worth noting that some of the following measures are commonly adopted to prevent bias, but there continues to be no comprehensive approach in place. These methods include:

Restriction on the use of protected variables (unawareness):

An excellent place to start is to do away completely with the prohibited attributes when training the AI model. This approach becomes the first level of defense and is commonly prescribed by most anti-discriminatory frameworks.

AI has a certain advantage in this regard. With human decision-makers, it is nearly impossible to prove to auditors that a decision was entirely unaffected by the protected factors, which are easily observed during any social interaction. With an AI-based lending approval model, the exclusion of the protected variables can be shown systematically and deterministically.

While the legal and model validation teams in your organization may be satisfied with this simple exclusion, since it is consistent with the notion of disparate treatment as defined in ECOA, 1974, real-life results may not be as simple.
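As a minimal sketch of the unawareness approach, the snippet below strips protected attributes from an applicant record before it reaches model training. The column names and the `drop_protected` helper are hypothetical, chosen only for illustration; a real application schema will differ.

```python
# Hypothetical column names for the protected attributes.
PROTECTED = {"race", "color", "religion", "national_origin",
             "sex", "marital_status", "age", "public_assistance"}

def drop_protected(record, protected=PROTECTED):
    """Return a copy of an applicant record without the protected attributes."""
    return {key: value for key, value in record.items() if key not in protected}

# Toy applicant record (invented values).
applicant = {"income": 52000, "loan_amount": 15000,
             "sex": "F", "age": 41, "zip_code": "60601"}

features = drop_protected(applicant)

# The resulting feature list is the audit artefact: it can be logged and
# checked mechanically to show that no protected attribute was used.
assert PROTECTED.isdisjoint(features)
```

Note that `zip_code` survives this filter, which is exactly why exclusion alone may not be enough.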

Removing variables highly correlated with the protected variables:

Data is an accurate reflection of our society. The discrimination in our history, and its resulting fallout, has been extensively discussed and documented. Every country, subculture, and individual, however, tends to have its own version of discrimination, shaped by attributes personal to their judgment. By extension, there may be variables other than the protected variables in the data that carry encoded information about the protected variables.

For example, a part of the city inhabited by a minority class with a disproportionate number of refused loan applications will influence AI model predictions.

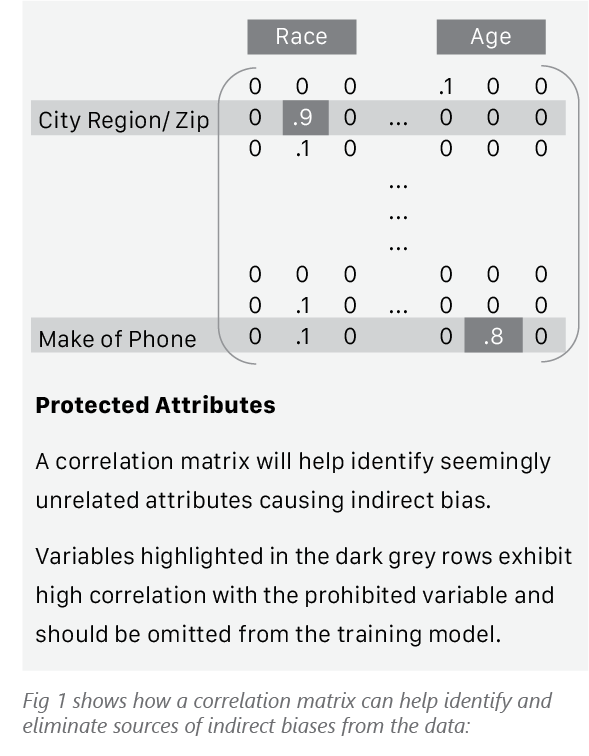

Indirect influencers of this kind are easy to detect with a simple statistical correlation measure. The idea is to identify the variables that have a high correlation with any of the protected variables and diligently remove them from the list of approved elements for AI model training.

A correlation matrix, as shown below, helps to quickly identify seemingly unrelated attributes that cause indirect bias. This matrix is just a tabular arrangement of correlation measures between the prohibited variables (columns) and the other variables (rows). For example, a high correlation value of 0.9 between ‘Race’ and ‘City/Region’ indicates that certain cities/regions are inhabited predominantly by people belonging to a specific community/race. Hence, using a location attribute such as ‘City/Region’ or ‘ZIP Code’ will bring in the inherent bias and cause the model to discriminate against one particular race.
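The screening step described above could be sketched roughly as follows. This is an illustration under stated assumptions, not a prescribed method: the data is invented, the attributes are assumed to be numerically encoded already, Pearson correlation is used as the measure, and the 0.7 cut-off is an arbitrary choice for the example.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length numeric lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)

def flag_proxies(columns, protected, threshold=0.7):
    """Return (feature, protected attribute, r) for every pair with |r| >= threshold."""
    flagged = []
    for prot in protected:
        for name, values in columns.items():
            if name in protected:
                continue  # only screen the non-protected candidates
            r = pearson(values, columns[prot])
            if abs(r) >= threshold:
                flagged.append((name, prot, round(r, 2)))
    return flagged

# Toy data: 1 = belongs to the minority class / lives in region A.
columns = {
    "minority": [1, 1, 1, 1, 0, 0, 0, 0],
    "region_a": [1, 1, 1, 0, 0, 0, 0, 0],
    "income_k": [40, 55, 48, 60, 52, 45, 58, 50],
}

flagged = flag_proxies(columns, protected=["minority"])
print(flagged)  # region_a is flagged as a likely proxy; income_k is not
```

Each flagged pair corresponds to one high-valued cell of the correlation matrix, and the flagged feature would be a candidate for removal from the training set.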

Quantifying the Bias

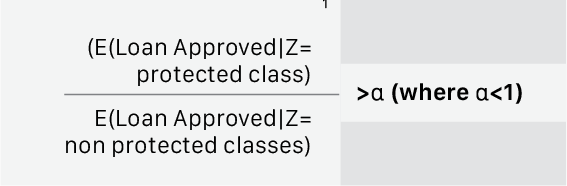

So, most legal teams are satisfied with the first technique, and we have offered an additional step as well. At this point, we may hope against the odds to have eliminated all bias from the AI model we created. To ascertain that the bias is within a certain permissible threshold, we need a formal metric. The threshold ‘a’ has to be selected with extreme care, so that any discrepancy it tolerates can safely be considered noise. One such metric could be expressed as below (for a model that approves or rejects a loan application; protected class = attribute protected under anti-discriminatory laws).

In other words, we would like to keep the ratio of the expected loan approval rate from the model under test for the protected/minority class to that for the unprotected/majority class above a certain threshold, to reduce the likelihood of bias in the model. The generally adopted guideline comes from the four-fifths rule, or 80% rule, which treats a selection rate for any group that is less than four-fifths of the rate for the group with the highest selection rate as evidence of adverse impact.

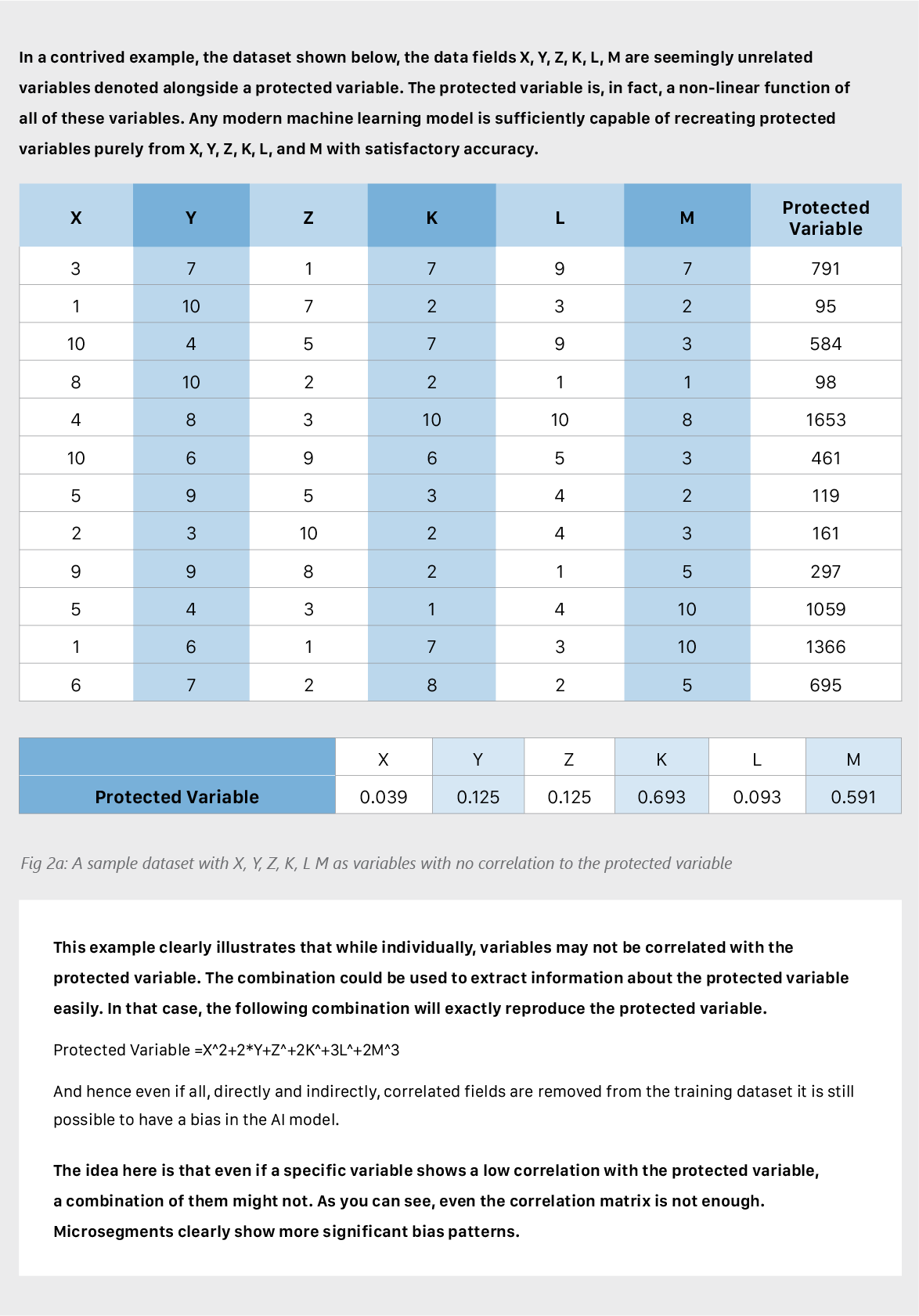
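To make the metric concrete, here is a small sketch that computes the ratio of approval rates, that is P(approved | protected) / P(approved | unprotected), and compares it with a threshold of a = 0.8 taken from the four-fifths rule. The decisions and group labels are invented toy data, and the function names are assumptions made for this example.

```python
def approval_rate(decisions):
    """Share of applications approved (decisions are 1 = approve, 0 = reject)."""
    return sum(decisions) / len(decisions)

def disparate_impact_ratio(decisions, groups, protected_value):
    """Approval rate of the protected group divided by that of everyone else."""
    protected = [d for d, g in zip(decisions, groups) if g == protected_value]
    others = [d for d, g in zip(decisions, groups) if g != protected_value]
    return approval_rate(protected) / approval_rate(others)

# Toy model output: "p" = protected class, "u" = unprotected class.
decisions = [1, 0, 0, 1, 0, 1, 1, 1, 0, 1]
groups = ["p", "p", "p", "p", "p", "u", "u", "u", "u", "u"]

A = 0.8  # threshold 'a', here taken from the four-fifths rule
ratio = disparate_impact_ratio(decisions, groups, "p")
passes = ratio >= A

print(f"ratio = {ratio:.2f}, passes four-fifths check: {passes}")
```

In this toy case the protected group is approved 40% of the time against 80% for the rest, so the ratio is well below the threshold and the model under test would be flagged.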

At this point, after diligently executing steps 1 and 2 and carefully selecting ‘a’, we might be convinced that we have removed all possible bias. This delusion is probably the biggest stumbling block in this exercise. The following point illustrates why it is still possible to detect bias in the ‘under test’ model.

To put this in perspective, a bank might provide multiple variables to the AI modelers. For simplicity’s sake, let’s consider only two of them: area of residence and brand of phone (let’s call the phone Swift – X). We know that the male-to-female ratio in the world population is 101 to 100 (about 50-50). Now, while Swift – X might have an equal ratio of male and female buyers globally, in a particular country the ratio might be skewed towards men (perhaps due to factors such as social structure, design, or price). AI can use a combination of these two variables to infer the gender of the applicant and corrupt the decision with gender bias. This makes the entire model biased, because the model knows that if you own a Swift – X and live in that particular country, you are more likely to be male.
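The effect can be reproduced on a toy dataset. In the sketch below, all counts are invented and the country labels "A" and "B" are hypothetical: neither the phone brand nor the country says anything about gender on its own, yet the two together reveal it with high probability. A pairwise correlation screen would not catch this.

```python
# Toy records of (country, phone, gender); all counts are invented.
records = (
    [("A", "Swift-X", "M")] * 45 + [("A", "Swift-X", "F")] * 5
    + [("A", "other", "M")] * 5 + [("A", "other", "F")] * 45
    + [("B", "Swift-X", "M")] * 5 + [("B", "Swift-X", "F")] * 45
    + [("B", "other", "M")] * 45 + [("B", "other", "F")] * 5
)

def male_share(rows, country=None, phone=None):
    """Share of male applicants among rows matching the given filters."""
    genders = [g for c, p, g in rows
               if (country is None or c == country)
               and (phone is None or p == phone)]
    return sum(g == "M" for g in genders) / len(genders)

overall = male_share(records)                               # no information
by_phone = male_share(records, phone="Swift-X")             # phone alone
by_country = male_share(records, country="A")               # country alone
combined = male_share(records, country="A", phone="Swift-X")  # both together

print(overall, by_phone, by_country, combined)
```

Each variable alone leaves the male share at one half, while the combination pushes it to nine in ten, which is the leakage a model can exploit.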

Hopefully, this provides a good flavor of why detecting bias is not as trivial as it seems on the face of it. The laws need to change, and people should start moving to new methods. Hence it becomes imperative to think outside the box and apply non-conventional techniques to remove bias. We will quickly touch upon a few of those techniques, currently useful in labs but likely to enter the mainstream, in the rest of this article.

Reweighing – A Contrarian View

It can be shown that including protected factors, rather than excluding them, when building machine learning models may lead to less discriminatory outcomes. Including these variables in the training dataset presents an opportunity to adjust the weights on these fields to negate the effect of the bias-inducing factors, instead of blinding the model entirely to the supposed causes of bias.
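One well-known way to do this from the fairness literature is the reweighing scheme of Kamiran and Calders, in which each training record receives the weight P(group) × P(label) / P(group, label). The article does not say which scheme it has in mind, so the sketch below is only one possible instance, with invented data and hypothetical function names.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Weight each record by P(group) * P(label) / P(group, label)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]

def weighted_approval_rate(groups, labels, weights, group):
    """Approval rate of one group after applying the record weights."""
    num = sum(w * y for g, y, w in zip(groups, labels, weights) if g == group)
    den = sum(w for g, w in zip(groups, weights) if g == group)
    return num / den

# Toy history: the protected group "p" was approved far less often than "u".
groups = ["p"] * 5 + ["u"] * 5
labels = [1, 0, 0, 0, 0, 1, 1, 1, 1, 0]

weights = reweighing_weights(groups, labels)
rate_p = weighted_approval_rate(groups, labels, weights, "p")
rate_u = weighted_approval_rate(groups, labels, weights, "u")

print(weights[:2], rate_p, rate_u)
```

The rare combinations (an approved protected applicant, a rejected unprotected one) are up-weighted, so that in the weighted training data the approval rate no longer depends on group membership. Computing these weights requires the protected attribute to be present in the dataset, which is the contrarian point made above.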

Although eliminating race, gender, or other characteristics from the model doesn’t produce a quick fix, algorithmic decision-making does offer a different kind of opportunity to reduce discrimination. Instead of trying to untangle how an algorithm makes decisions, regulators can focus on whether it leads to fair outcomes and find more effective ways of dealing with data instead of rudimentary fixes.

Mixing is good!

It’s important to understand that there may not be a universal solution to the problem of bias. The complex nature of the problem makes it imperative for the data scientist to cautiously pick one or mix from the buffet of available techniques. Adding a penalty is a popular technique to curb learning from the noise in data (overfitting). Extending the idea and optimizing the cost function over an additional ‘discrimination aware penalty term’ could help regularize bias. In another technique, the predictions from the AI model could be appropriately recalibrated to normalize the bias detected in the final output predictions. The calibration could nudge the output in a measured step to slightly favor the unprivileged group so that the final output is within the permissible skewness range.
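Both ideas can be sketched in a few lines. The code below is an illustration under assumptions rather than a reference implementation: the penalty is taken to be the squared gap in mean predicted approval probability between the two groups, the recalibration lowers the approval cut-off for the unprivileged group in fixed steps, and all names, scores, and parameter values are invented for the example.

```python
from math import log

def mean(values):
    return sum(values) / len(values)

def penalized_loss(probs, labels, groups, unprivileged, lam=1.0):
    """Log loss plus lam * (gap in mean predicted approval between groups)^2."""
    eps = 1e-12  # guards against log(0)
    log_loss = -mean([y * log(max(p, eps)) + (1 - y) * log(max(1 - p, eps))
                      for p, y in zip(probs, labels)])
    unpriv = [p for p, g in zip(probs, groups) if g == unprivileged]
    priv = [p for p, g in zip(probs, groups) if g != unprivileged]
    gap = mean(priv) - mean(unpriv)
    return log_loss + lam * gap ** 2

def recalibrate_threshold(probs, groups, unprivileged,
                          base=0.5, target=0.8, step=0.01):
    """Lower the unprivileged group's cut-off in small steps until its
    approval rate is at least `target` times the privileged group's rate."""
    unpriv = [p for p, g in zip(probs, groups) if g == unprivileged]
    priv = [p for p, g in zip(probs, groups) if g != unprivileged]
    priv_rate = mean([p >= base for p in priv])
    k = 0
    while (base - k * step > 0
           and mean([p >= base - k * step for p in unpriv]) < target * priv_rate):
        k += 1
    return max(base - k * step, 0.0)

# Toy model scores: "u" = privileged group, "p" = unprivileged group.
probs = [0.9, 0.7, 0.6, 0.55, 0.3, 0.8, 0.45, 0.42, 0.405, 0.2]
groups = ["u"] * 5 + ["p"] * 5
labels = [1, 1, 1, 0, 0, 1, 0, 1, 0, 0]

loss = penalized_loss(probs, labels, groups, unprivileged="p", lam=1.0)
cutoff = recalibrate_threshold(probs, groups, unprivileged="p")
print(round(loss, 4), round(cutoff, 2))
```

The first function would be used as the training objective, so the optimizer trades accuracy against the group gap through `lam`; the second is a post-processing step applied to the scores of an already trained model.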

Finally, keeping it Transparent (Explainable Models)

The route to ubiquitous, trustworthy, and accountable AI implementation is complex and challenging, but it also presents a marvelous opportunity. Once solved, AI would not just incrementally improve but exponentially augment the quality of thinking across several areas. In addition to testing ways to eradicate bias, organizations must consider ways to integrate transparency and accountability into their AI platforms. Explainable and interpretable AI, where every parameter behind a decision can be viewed, evaluated, and assessed, will be integral to furthering trust both within and outside an organization. Once they have a way to understand AI output, companies can course-correct their approach, iteratively arriving at the best AI deployment for their business and customer needs.

Addressing AI bias is no straightforward challenge, but there are steps organizations can take towards making fairer and more explainable decisions. With EdgeVerve’s FinXEdge suite of cognitive connected business applications, enterprises can generate clear audit trails for every recommendation, including factors determining outcomes, while also improving results through weighted bias decision-making.