Summary

Creating labeled training sets is the most time-consuming and expensive part of applying Machine Learning. Solving this problem is a crucial building block for scaling enterprise adoption of AI. Read on to learn how Data Programming can be a much-desired solution.

Today, large volumes of training data are hand-labeled by humans. One example is ImageNet, an image database organized according to the WordNet hierarchy. This project, where $300K was spent on labeling 14 million images, was instrumental in advancing computer vision and deep learning research. ReportLinkerⅰ estimates that total global spending by organizations on dataset collection and labeling will reach $3.5 billion by 2026. At Infosys, we have estimated that 25-60% of the implementation cost of ML projects is spent on manual labeling and validation.

If these figures are not staggering enough, they are set to rise further, because Machine Learning algorithms are becoming increasingly sophisticated and consume more features from the training dataset than before. For example, in key-value pair extraction from a document, the training data would traditionally contain the key name, its datatype, and its location. Multimodal ML algorithms can also consume rich text information for better prediction, which means that all training data needs to be recreated to include the new input features.

Another example is self-driving cars, which take inputs from cameras and sensors. Future inputs will come from LiDAR technology. The implication is that the features fed to the model will change, and hence the training data will have to be created all over again. This needs to be addressed now, with an efficient, shop-usable, and faster way to create the datasets that enable us to deploy Machine Learning models.

Leveraging existing domain knowledge and skills

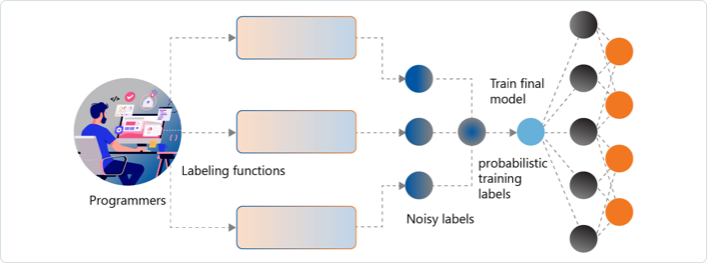

Software programming is the key strength of software teams in any enterprise. Data programming hinges on the creation of ML training data by programmatic rules or heuristics called “labeling functions.” The team of researchers from Stanford who coined the term Data Programming also created an open source project for it, called Snorkel. While labeling functions provide a smooth starting point, we cannot expect them to create high-quality Machine Learning data on their own. The labeled data may have incorrect or missing labels, called noise. The extent of the noise is determined by the logic of the labeling function. When the logic creates noise, we refer to it as a weak signal. One example of a weak signal is the hashtags added on Instagram, Twitter, or other social media. What if you were using hashtags to analyze the Twitter handle of a company’s product support, or market sentiment about the company? Many hashtags used on social media are not representative enough, or free enough of bias, to build an accurate sentiment analysis model. But they can still serve as preliminary data.
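To make the idea of a labeling function concrete, here is a minimal sketch in plain Python. It is not Snorkel's API. The hashtag lists, the complaint phrases, the function names, and the sample tweets are all hypothetical and exist only for illustration. Each function votes for a sentiment label or abstains, and applying all of them to a set of texts produces a matrix of noisy votes.

```python
# Label values (an assumed convention for this sketch).
ABSTAIN, NEGATIVE, POSITIVE = -1, 0, 1

def lf_positive_hashtag(text):
    """Vote POSITIVE when a praising hashtag appears (hypothetical rule)."""
    tags = {"#love", "#awesome", "#thanks"}
    return POSITIVE if tags & set(text.lower().split()) else ABSTAIN

def lf_negative_hashtag(text):
    """Vote NEGATIVE when a complaining hashtag appears (hypothetical rule)."""
    tags = {"#fail", "#worst", "#refund"}
    return NEGATIVE if tags & set(text.lower().split()) else ABSTAIN

def lf_complaint_words(text):
    """Vote NEGATIVE when a typical complaint phrase appears (hypothetical rule)."""
    phrases = ("not working", "broken", "still waiting")
    return NEGATIVE if any(p in text.lower() for p in phrases) else ABSTAIN

LABELING_FUNCTIONS = [lf_positive_hashtag, lf_negative_hashtag, lf_complaint_words]

def apply_labeling_functions(texts, lfs=LABELING_FUNCTIONS):
    """Return one row of votes per text and one column per labeling function."""
    return [[lf(text) for lf in lfs] for text in texts]

tweets = [
    "Setup took two minutes #love",
    "App is broken again #fail",
    "Order arrived, still waiting on the charger #thanks",
    "Anyone know the store hours?",
]
label_matrix = apply_labeling_functions(tweets)
for tweet, votes in zip(tweets, label_matrix):
    print(votes, tweet)
```

The third tweet receives both a POSITIVE and a NEGATIVE vote, and the fourth receives no vote at all. These are the conflicting and missing labels that make each individual function a weak signal.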

The magic wand in data programming is about thinking beyond these labeling functions

Data programming is also an approach to combining multiple weak signals so that together they give better coverage and accuracy. The key challenge is resolving conflicting labels from multiple labeling sources. How do we know what is accurate without access to ground truth? A naïve and common method is to bring a human into the loop for every conflict resolution and final label assignment, but this defeats the purpose of “true” data programming. So, what are the state-of-the-art models we can use for this purpose?

The problem of conflicting labels also emerges in human-labeled data. Often, when workers are onboarded onto a data annotation platform, all annotators are given a test task with known answers so that their performance can be evaluated. Annotators who do not perform well are removed from the platform. Further, each annotator is awarded a “worker trust score” based on that performance. Each time there is a conflict, the platform resolves it with a vote weighted by the worker trust scores instead of an unweighted majority vote. In our context, the labeling functions are analogous to “virtual annotators.” Sprinkling test data with known answers (a source of truth) to evaluate a labeling function’s trust score would be possible if we had a sizeable source of truth or constant human quality assurance. However, we work with a limited source of truth and no human quality assurance of the final label assigned. Therefore, addressing this is key to the success of data programming.
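The difference between an unweighted majority vote and a trust-weighted vote can be sketched as follows. This is an illustrative example only: the function name `weighted_vote`, the trust scores, and the choice to abstain on ties are assumptions, not part of any annotation platform's API.

```python
from collections import defaultdict

ABSTAIN = -1  # assumed marker for "no vote"

def weighted_vote(votes, trust):
    """Return the label with the largest total trust.

    Abstentions are ignored. ABSTAIN is returned when nobody voted
    or when the two best labels are tied.
    """
    totals = defaultdict(float)
    for vote, weight in zip(votes, trust):
        if vote != ABSTAIN:
            totals[vote] += weight
    if not totals:
        return ABSTAIN
    ranked = sorted(totals.items(), key=lambda item: item[1], reverse=True)
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return ABSTAIN
    return ranked[0][0]

votes = [1, 0, 0]                 # three annotators disagree on one item
trust_scores = [0.95, 0.4, 0.45]  # hypothetical worker trust scores

print(weighted_vote(votes, [1, 1, 1]))     # unweighted majority vote
print(weighted_vote(votes, trust_scores))  # trust-weighted vote
```

With equal weights the two annotators voting 0 win. With the hypothetical trust scores, the single highly trusted annotator outweighs them (0.95 against 0.85), so the final label flips to 1.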

To address these issues, data programming methods pair a generative model with a discriminative model

The pairing is loosely reminiscent of Generative Adversarial Networks (GANs), which also combine a generative and a discriminative network and can be seen as a generalization of a Turing test. In a Turing test, a human evaluator converses with an unseen talker and tries to determine whether it is a machine or a human. If the evaluator cannot tell the difference, the Turing test is said to have been passed. In data programming, however, the two models are trained one after the other rather than against each other. The generative model uses the agreements and conflicts among the labeling functions to estimate the accuracy of each labeling function and its correlation with the others. The resulting probabilistic labels are then used to train a discriminative model, which can be compared with the human evaluator. This discriminative model learns to generalize beyond the information represented in the labeling functions. Researchers [at Snorkel] have shown that, with time and the addition of unlabeled data, discriminative models can find patterns beyond what is expressed in the labeling functions.
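The step of estimating labeling-function accuracy from agreements and conflicts alone can be sketched with a small expectation-maximization loop. This is a simplified stand-in, not Snorkel's label model: it assumes binary labels, a uniform class prior, and labeling functions that err independently, so it does not estimate correlations between them. The function name `fit_label_model`, the starting accuracy of 0.7, the clipping bounds, and the toy vote matrix are all assumptions.

```python
import math

ABSTAIN = -1  # assumed marker for "no vote"; labels are 0 or 1

def fit_label_model(label_matrix, n_iter=20, init_acc=0.7):
    """Estimate per-function accuracies and probabilistic labels without ground truth."""
    n_lfs = len(label_matrix[0])
    acc = [init_acc] * n_lfs
    probs = []
    for _ in range(n_iter):
        # E-step: P(y = 1 | votes), treating each vote as independent evidence.
        probs = []
        for row in label_matrix:
            log_odds = 0.0
            for vote, a in zip(row, acc):
                if vote == ABSTAIN:
                    continue
                weight = math.log(a / (1 - a))
                log_odds += weight if vote == 1 else -weight
            probs.append(1 / (1 + math.exp(-log_odds)))
        # M-step: accuracy = expected agreement with the current soft labels.
        for j in range(n_lfs):
            agree, total = 0.0, 0
            for row, p in zip(label_matrix, probs):
                if row[j] == ABSTAIN:
                    continue
                agree += p if row[j] == 1 else 1 - p
                total += 1
            if total:
                # Clip so the log-odds weight stays finite.
                acc[j] = min(max(agree / total, 0.05), 0.95)
    return acc, probs

# Toy votes: the first two functions mostly agree, the third often conflicts.
votes = [
    [1, 1, 0], [1, 1, 1], [0, 0, 1], [0, 0, 0],
    [1, 1, 0], [0, 0, 1], [1, -1, 1], [-1, 0, 0],
]
accuracies, soft_labels = fit_label_model(votes)
print([round(a, 2) for a in accuracies])
print([round(p, 2) for p in soft_labels])
```

The third function ends up with a lower estimated accuracy than the two that agree with each other, so its votes count for less in the probabilistic labels. Those probabilities, rather than hard labels, would then serve as training targets for the discriminative model.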

AI at Scale

Data programming effectively creates Machine Learning data while minimizing human labeling and the associated costs. It yields faster data creation and higher-quality labeled data, forming the foundational block for building AI at scale in enterprises.